Paper Review: Visual Captions: Augmenting Verbal Communication with On-the-fly Visuals

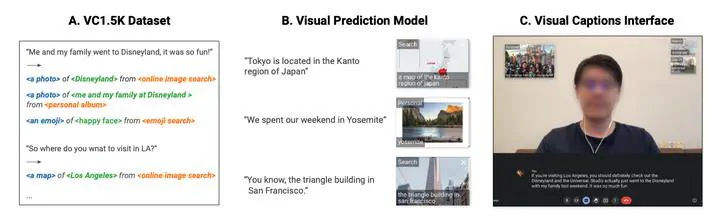

Problem

- Input: continuous stream of conversation

- During the conversation, limited cognitive resources to interact with AI prompts

- Without a real-time system deployed, it is difficult to study how people could interact with and benefit from visuals

Solution

synchronous human-human verbal communication

- Automatically predicts the “visual intent” of a conversation

- The visuals that people would like to show at the moment of their conversation

- Suggests them for users to immediately select and display

Design Space

Temporal:

- Synchronous

- Users select

- Asynchronous

- Set up corresponding visuals before

- Select and edit visuals after the text is composed

Subject:

- By the speaker to express their ideas (visualize their own speech)

- By the listener to understand others (visualize others’ speech)

- Support both subjects and allow all parties to visually supplement their own speech and ideas

Visual:

- Visual Content - what information to be visualized?

- Disambiguate the most critical and relevant information to visualize in the current context

- Visual Type - how should the visual be presented?

- Ranging from abstract to concrete

- Visual Source - where the visual should be retrieved from?

- From personal and public assets

Scale & Space:

- One-to-one

- One-to-many

- Many-to-many

Privacy

- Privately shown visuals are only presented to the speaker

- Publicly shown visuals are presented to everyone in the conversation

- The visuals can be selectively presented to a subset of audiences.

Initiation

- Proactively providing visual augmentations without user interaction

- On-demand-suggest

- Auto-suggest

- Auto-display

Interaction

- Speech

- Gesture

- Body pose

- Facial expression

- Gaze

- Custom input devices